ArangoDB Hot Backup: Creating Consistent Cluster-Wide Snapshots

Introduction

“Better to have, and not need, than to need, and not have.”

Franz Kafka

Franz Kafka’s talents wouldn’t have been wasted as DBA. Well, reasonable people might disagree.

With this article, we are shouting out a new enterprise feature for ArangoDB: consistent online single server or cluster-wide “hot backups.”

If you do not care for an abstract definition and would rather directly see a working example, simply scroll down to Section “A full cycle” below.

Snapshots of arbitrary sized complex raw datasets, be it file systems, databases, etc.: they are extremely useful for ultra-fast disaster recovery. With ArangoDB 3.5.1, we are introducing the hot backup feature for the RocksDB storage engine, which allows one to do exactly that with ArangoDB.

Interested in trying out ArangoDB? Fire up your cluster in just a few clicks with ArangoDB ArangoGraph: the Cloud Service for ArangoDB. Start your free 14-day trial here

Using the new feature, one can take near-instantaneous snapshots of the entire operation, which includes a single server as well as cluster deployments. These hot backups can be made at very low cost in resources and with a single API call; so that creating and keeping many local hot sets is easily accomplished.

This is also the major difference to the current tool `arangodump`, which can produce a dump of a subset of the collections. However, this comes at a cost. First of all, `arangodump` has to create a complete physical copy of the data and has to go through the usual transport mechanisms to achieve this. Therefore it is much slower, takes a lot more space than a hot backup, and does not produce a consistent picture across collections. `arangodump` is still there and has its use cases, but the realm of near-instantaneous consistent snapshots will be taken care of by hot backups.

Intuitive labeling and an equally efficient restore mechanism to any past compatible snapshot make hot backup simple to use and easily integrated into automation of administration and maintenance of ArangoDB instances.

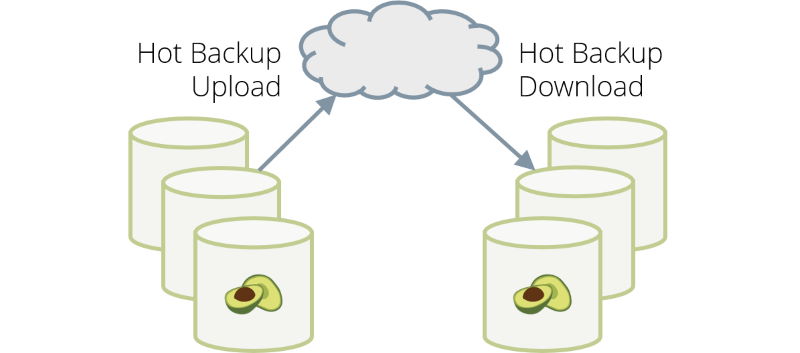

The usefulness of local snapshots is evident, but it would be somewhat limiting if DBAs would have to manage transfers to archiving or to other ArangoDB instances; say for switching hardware or riskless testing of new versions of database applications, etc. That’s why the hot backup comes with the full functionality of the vast feature set of the `rclone` synchronization program for data transfer. `rclone` supports every conceivable standard for secure file transfer in the industry. From locally or on-site mounted file systems to cloud protocols like S3, WebDAV, GCS, you name it.

How is it done?

RocksDB offers the mechanism to create “checkpoints”, a mechanism we have come to rely on for building this feature. RocksDB checkpoints provide consistent read-only views over the entire state of the key-value store. The word view is actually quite accurate, as it sums up the idea that the checkpoints all work on the same datasets, just at a different time.

This has two desirable consequences. Firstly, our hot backups come at a near-zero cost in resources, CPU as well as RAM. Secondly, they can be obtained instantaneously. We have to acquire a global transaction lock, however, to make sure that we isolate a consistent state for the duration of the snapshot.

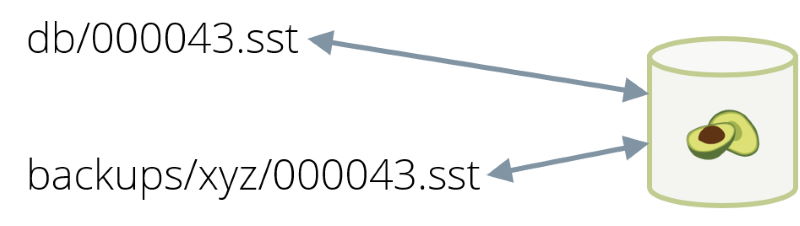

The next ingredients are file system hard links, which allow one to hold on to the same data file over multiple directories as if they were copies in reality, which are then released, however, when no longer needed. This is illustrated in the following image where a file name in the database directory and another file name in one of the backups, both point to the very same piece of data on disk (this is called an inode).

This saves a lot of disk space and the actual piece of data (inode) is only removed when both directory entries are deleted. Using this file system mechanism, one can then minimize the cost of the hot backups in storage space.

The entire process of creating a snapshot on a single ArangoDB instance then goes as follows: Stop all writes to the entire instance, flush the write-ahead log, create the snapshot in time, create a new directory with hard links to all database files, which are necessary for a consistent data layer with the proper labeling.

The restore procedure walks back the above process. Again, one needs to stop IO operations to the entire instance, shift to the directory on a former snapshot and restart the internal database process.

The cluster

Take now the whole procedure to the distributed ArangoDB deployment. Regardless of how you run your ArangoDB cluster, be it in a Kubernetes, using the ArangoDB Starter or in a manual fashion, you will have the whole shebang at your disposal.

Of course, the mere introduction of distribution adds complexity both to the taking of as well as restoring to snapshots, but, and this is a big but, the interaction remains transparent to the user. The API does not change a bit.

I would be lying, though, if I claimed that there is no perceptual difference at all, and this has to do with the global transaction locks to isolate the consistent state. In order to be able to make any consistency guarantees, the locks need now to engulf the entire cluster, which only increases the total time to obtain the lock.

The locking mechanism, however, would not be a good one, if it took a part of the cluster hostage for a long time while other servers are still engaged in other potentially long-running transactions.

So we iteratively try to obtain the lock with growing lock times while releasing the partial lock every time we timeout. If the global lock cannot be gotten, that is after a configurable overall timeout, which defaults at 2 minutes, the snapshot will eventually fail. However, one still can enforce a possibly inconsistent snapshot to be taken, as it might still be worlds away from total data disaster.

One would experience the repeated lock attempts in growing pauses in operation intertwined with normal periods in between.

Limitations

So these hot backups are truly great for ultra-fast disaster recovery, however, as always in life, they don’t come with a free lunch. We need to discuss limitations and caveats.

Hot backups or snapshots are exactly that. They are complete time warps. One cannot restore a single database, let alone a single collection, user, etc. All or nothing. This can also be considered a great thing by the way. One knows, what one gets. The state as it was properly working 20 minutes or 1 month ago or before and vice versa. But still, one needs to appreciate that that would necessitate creating backups of databases, collections, users, etc. independent of a snapshot.

As obvious as it may already be, I cannot stress enough how hot backups don’t replace proper backups, let alone better backups and vice versa.

Also, again this is the nature of a snapshot. One may only restore a snapshot to the identical topology. That means that a snapshot of a 3 node cluster can only be restored to a 3 node cluster, even if it is the same cluster that has changed a bit down the road.

Also, as the raw data files are associated with the raw operations layer above, it is obvious that one cannot use snapshots with file formats, which are not yet or no longer compatible with the running version of ArangoDB, that one wants to restore to. This specifically implies that snapshots created with an ArangoDB 3.5 version might not be usable for a later minor or major version (patch releases within 3.5 will be fine).

All of the above caveats need to be considered carefully when one integrates the hot backups in operation and administration processes.

A full cycle

In this section, we will demonstrate the new feature. Assume you have a cluster running at the endpoint `tcp://localhost:8529`. Then, to take a hot backup you can simply do

arangobackup create

which will answer (after a few seconds):

2019-10-02T10:36:52Z [17934] INFO [06792] {backup} Server version: 3.5.1

2019-10-02T10:36:53Z [17934] INFO [c4d37] {backup} Backup succeeded. Generated identifier '2019-10-02T10.36.52Z_db25c274-10ed-4c17-9628-972492fda290'

2019-10-02T10:36:53Z [17934] INFO [ce423] {backup} Total size of backup: 1296153, number of files: 12As you can see, the total number of bytes the backup files take on disk, the number of data files as well as the number of DBServers at the time when the backup was taken, are reported. Furthermore, the backup has got its own unique identifier, which by default consists of a timestamp and a UUID, in this case

2019-10-02T10.36.52Z_db25c274-10ed-4c17-9628-972492fda290What will happen behind the scenes is that each of my three DBServers has its share of the hot backup in its disk, note that it shares the actual files with the normal database directory, so no new space on disk is needed at this stage. We can display the backup with the `list` command of `arangobackup`:

arangobackup list

which yields:

2019-10-02T10:37:22Z [18018] INFO [06792] {backup} Server version: 3.5.1

2019-10-02T10:37:22Z [18018] INFO [e0356] {backup} The following backups are available:

2019-10-02T10:37:22Z [18018] INFO [9e6b6] {backup} - 2019-10-02T10.36.52Z_db25c274-10ed-4c17-9628-972492fda290

2019-10-02T10:37:22Z [18018] INFO [0f208] {backup} version: 3.5.1

2019-10-02T10:37:22Z [18018] INFO [55af7] {backup} date/time: 2019-10-02T10:36:52Z

2019-10-02T10:37:22Z [18018] INFO [43522] {backup} size in bytes: 1296153

2019-10-02T10:37:22Z [18018] INFO [12532] {backup} number of files: 12

2019-10-02T10:37:22Z [18018] INFO [43212] {backup} number of DBServers: 3

2019-10-02T10:37:22Z [18018] INFO [56242] {backup} potentiallyInconsistent: false

As you can see, the above meta information about the backup is shown, too, we will talk about the `potentiallyInconsistent` further down.

However, this is not actually a good backup yet, since it resides on the same machine or even disk, and it even shares files with the database. So we need to upload the data to some safe place, this could, for example, be an S3 bucket in the cloud. Here is how you could achieve this:

arangobackup upload --identifier 2019-10-02T10.36.52Z_db25c274-10ed-4c17-9628-972492fda290 --remote-path S3:buck150969/backups --rclone-config-file remote.json

You specify a path for the target repository (just a directory in an S3 bucket). Here, `buck150969` is the name of my S3 bucket and `backups` is a directory name in there. You supply the credentials and the rest of the configuration in a JSON file. Here, we have used a file like this:

{

"S3": {

"type": "s3",

"provider": "aws",

"env_auth": "false",

"access_key_id": "XXXXXXXXXXXXXXXXXXXX",

"secret_access_key": "YYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYY",

"region": "eu-central-1",

"location_constraint": "eu-central-1",

"acl": "private"

}

}

(credentials invalidated, of course!)

This is an asynchronous operation, since it can take a while to upload the files. Therefore, this command only initiates the transfer and the actual work will be done in the background:

2019-10-02T11:12:14Z [20728] INFO [06792] {backup} Server version: 3.5.1

2019-10-02T11:12:15Z [20728] INFO [a9597] {backup} Backup initiated, use

2019-10-02T11:12:15Z [20728] INFO [4c459] {backup} arangobackup upload --status-id=6010501

2019-10-02T11:12:15Z [20728] INFO [5cd70] {backup} to query progress.

However, it does tell you how to track the progress of the upload. You can use this command to get this information:

arangobackup upload --upload-id 6010501

which gives you a nice overview like this:

2019-10-02T11:12:50Z [20286] INFO [06792] {backup} Server version: 3.5.1

2019-10-02T11:12:50Z [20286] INFO [24d75] {backup} PRMR-455990a9-6f0a-468f-9805-d890341a8ad9 Status: COMPLETED

2019-10-02T11:12:50Z [20286] INFO [68cc8] {backup} Last progress update 2019-10-02T11:12:49Z: 5/5 files done

2019-10-02T11:12:50Z [20286] INFO [24d75] {backup} PRMR-820fbee4-5f0a-4b16-b6ac-d860b84c9f97 Status: COMPLETED

2019-10-02T11:12:50Z [20286] INFO [68cc8] {backup} Last progress update 2019-10-02T11:12:49Z: 5/5 files done

2019-10-02T11:12:50Z [20286] INFO [24d75] {backup} PRMR-83a56ef0-79f8-4036-a147-a766cd139d77 Status: COMPLETED

2019-10-02T11:12:50Z [20286] INFO [68cc8] {backup} Last progress update 2019-10-02T11:12:49Z: 5/5 files done

Here, it was so fast that I only got the completed state. But even for larger backups, eventually, the upload is completed. To demonstrate that and the download operation, we first delete the local copy like this:

arangobackup delete --identifier 2019-10-02T10.36.52Z_db25c274-10ed-4c17-9628-972492fda290

If you try this yourself, verify that the backup is indeed gone with the `arangobackup list` command:

arangobackup list

2019-10-02T11:15:19Z [20654] INFO [06792] {backup} Server version: 3.5.1

2019-10-02T11:15:19Z [20654] INFO [efc76] {backup} There are no backups available.

Let’s now download the backup again. The command is very similar to the `upload` command:

arangobackup download --identifier 2019-10-02T10.36.52Z_db25c274-10ed-4c17-9628-972492fda290 --remote-path S3:buck150969/backups --rclone-config-file remote.json

Again, you only get information about how to query progress:

2019-10-02T11:16:14Z [20728] INFO [06792] {backup} Server version: 3.5.1

2019-10-02T11:16:15Z [20728] INFO [a9597] {backup} Backup initiated, use

2019-10-02T11:16:15Z [20728] INFO [4c459] {backup} arangobackup download --status-id=6010533

2019-10-02T11:16:15Z [20728] INFO [5cd70] {backup} to query progress.

Doing this, you get:

arangobackup download --download-id ...

2019-10-02T11:16:19Z [21033] INFO [06792] {backup} Server version: 3.5.1

2019-10-02T11:16:19Z [21033] INFO [24d75] {backup} PRMR-455990a9-6f0a-468f-9805-d890341a8ad9 Status: COMPLETED

2019-10-02T11:16:19Z [21033] INFO [68cc8] {backup} Last progress update 2019-10-02T11:16:17Z: 5/5 files done

2019-10-02T11:16:19Z [21033] INFO [24d75] {backup} PRMR-820fbee4-5f0a-4b16-b6ac-d860b84c9f97 Status: COMPLETED

2019-10-02T11:16:19Z [21033] INFO [68cc8] {backup} Last progress update 2019-10-02T11:16:17Z: 5/5 files done

2019-10-02T11:16:19Z [21033] INFO [24d75] {backup} PRMR-83a56ef0-79f8-4036-a147-a766cd139d77 Status: COMPLETED

2019-10-02T11:16:19Z [21033] INFO [68cc8] {backup} Last progress update 2019-10-02T11:16:17Z: 5/5 files done

After successful completion, the backup is there again:

arangobackup list

2019-10-02T11:16:24Z [21059] INFO [06792] {backup} Server version: 3.5.1

2019-10-02T11:16:24Z [21059] INFO [e0356] {backup} The following backups are available:

2019-10-02T11:16:24Z [21059] INFO [9e6b6] {backup} - 2019-10-02T10.36.52Z_db25c274-10ed-4c17-9628-972492fda290

2019-10-02T11:16:24Z [21059] INFO [0f208] {backup} version: 3.5.1

2019-10-02T11:16:24Z [21059] INFO [55af7] {backup} date/time: 2019-10-02T10:36:52Z

2019-10-02T11:16:24Z [21059] INFO [43522] {backup} size in bytes: 1296153

2019-10-02T11:16:24Z [21059] INFO [12532] {backup} number of files: 12

2019-10-02T11:16:24Z [21059] INFO [43212] {backup} number of DBServers: 3

2019-10-02T11:16:24Z [21059] INFO [56242] {backup} potentiallyInconsistent: false

And now we can of course have our big moment, the restore. If you try this at home, simply modify your database cluster by deleting or adding stuff and then do a restore:

arangobackup restore --identifier 2019-10-02T10.36.52Z_db25c274-10ed-4c17-9628-972492fda290and again after just a few seconds, you get something like this:

2019-10-02T11:17:58Z [21103] INFO [06792] {backup} Server version: 3.5.1

2019-10-02T11:18:17Z [21103] INFO [b6d4c] {backup} Successfully restored '2019-10-02T10.36.52Z_db25c274-10ed-4c17-9628-972492fda290'

indicating that the restore is complete. If you now inspect the data in your database, you will see the state as it was when you took the hot backup. It remains to show you one more thing, which is crucial to achieving a consistent snapshot across your cluster. We need to get a global transaction lock, that is, we have to briefly stop all writing transactions – on all DBServers at the same time. That means that if a longish write operation is ongoing, we cannot do this short term. Let me demonstrate.

First, execute in `arangosh` a long running writing transaction:

c = db._create("c",{numberOfShards:3});

db._query("FOR i IN 1..25 INSERT {Hello: SLEEP(1)} INTO c RETURN NEW").toArray();

This will take some 25 seconds since the `SLEEP` function used to fabricate a document sleeps one second. The write transaction for this query will also take this long.

If you try to create a hot backup during this time (I intentionally lower the timeout of how long to try):

arangobackup create --max-wait-for-lock 5

you will get a disappointing result after 5 seconds (provided the above query is still running):

2019-10-02T11:19:15Z [21707] INFO [06792] {backup} Server version: 3.5.1

2019-10-02T11:19:21Z [21707] ERROR [8bde3] {backup} Error during backup operation 'create': got invalid response from server: HTTP 500: failed to acquire global transaction lock on all db servers: RocksDBHotBackupLock: locking timed out

Within the upper limit of 5 seconds, it was not possible to halt all write transactions since there was one which was running for 25 seconds.

One way to solve this problem is to stop writes from the client side, or by switching the cluster into read-only mode. This will ensure that you can take a consistent hot backup across your cluster.

If you cannot interrupt your writes at all but you do not really care about the consistency, you can use this switch:

arangobackup create --max-wait-for-lock 5 --allow-inconsistent true

and it will – even if the above query is still running – create a hot backup, but warns you:

2019-10-02T11:19:45Z [21740] INFO [06792] {backup} Server version: 3.5.1

2019-10-02T11:19:51Z [21740] WARNING [f448b] {backup} Failed to get write lock before proceeding with backup. Backup may contain some inconsistencies.

2019-10-02T11:19:51Z [21740] INFO [c4d37] {backup} Backup succeeded. Generated identifier '2019-10-02T11.19.45Z_836880e3-698a-46c7-8622-7ed99d610be1'

2019-10-02T11:19:51Z [21740] INFO [ce423] {backup} Total size of backup: 2528075, number of files: 15

Note that this is also reflected in the backup list:

arangobackup list

gives you:

2019-10-02T11:20:09Z [21777] INFO [06792] {backup} Server version: 3.5.1

2019-10-02T11:20:09Z [21777] INFO [e0356] {backup} The following backups are available:

2019-10-02T11:20:09Z [21777] INFO [9e6b6] {backup} - 2019-10-02T10.36.52Z_db25c274-10ed-4c17-9628-972492fda290

2019-10-02T11:20:09Z [21777] INFO [0f208] {backup} version: 3.5.1

2019-10-02T11:20:09Z [21777] INFO [55af7] {backup} date/time: 2019-10-02T10:36:52Z

2019-10-02T11:20:09Z [21777] INFO [43522] {backup} size in bytes: 1296153

2019-10-02T11:20:09Z [21777] INFO [12532] {backup} number of files: 12

2019-10-02T11:20:09Z [21777] INFO [43212] {backup} number of DBServers: 3

2019-10-02T11:20:09Z [21777] INFO [56242] {backup} potentiallyInconsistent: false

2019-10-02T11:20:09Z [21777] INFO [9e6b6] {backup} - 2019-10-02T11.19.45Z_836880e3-698a-46c7-8622-7ed99d610be1

2019-10-02T11:20:09Z [21777] INFO [0f208] {backup} version: 3.5.1

2019-10-02T11:20:09Z [21777] INFO [55af7] {backup} date/time: 2019-10-02T11:19:45Z

2019-10-02T11:20:09Z [21777] INFO [43522] {backup} size in bytes: 2528075

2019-10-02T11:20:09Z [21777] INFO [12532] {backup} number of files: 15

2019-10-02T11:20:09Z [21777] INFO [43212] {backup} number of DBServers: 3

2019-10-02T11:20:09Z [21777] INFO [56241] {backup} potentiallyInconsistent: true

This is where the above mentioned `potentiallyInconsistent` flag comes into play. If you have allowed inconsistent backups, then this flag tells you that indeed the backup could potentially be inconsistent across multiple DBServers.

Conclusion

We believe that hot backup is an important and very valuable new feature, so:

Life is still an optimization problem, but the problems have become much more benign 🙂

4 Comments

Leave a Comment

Get the latest tutorials, blog posts and news:

Skip to content

Skip to content

This is a nice feature to have. Using foxx-apps: Is their state (versions, config, …) preserved as well? In an environment using foxx, a data only snapshot is not sufficient and would require additional work to capture that state as well.

Foxx apps are snapshotted and restored in the cluster only so far.

Is there any way to get this to work between datacenters?

Of course you could use snapshots to replicate clusters and surely across datacenters. But you would have to live with potentially large lags and low replication rates. You might want to also consider our solutions, which is precisely meant for that purpose: https://www.arangodb.com/docs/stable/architecture-deployment-modes-dc2-dc.html