Time Traveling with Graph Databases: A Simplified Tutorial

A while back, we posted an in-depth article explaining how to performantly store and query graph revision data in ArangoDB. That article was heavy on the technical details, but light on straightforward examples. In this tutorial on time traveling with graph databases, we will leave behind most of the theory and help you get started with managing actual graph revision data by walking you through a simple use-case.

We will assume a basic familiarity with storing and querying graph and document data in ArangoDB using our arangosh tool.

A Simple Application

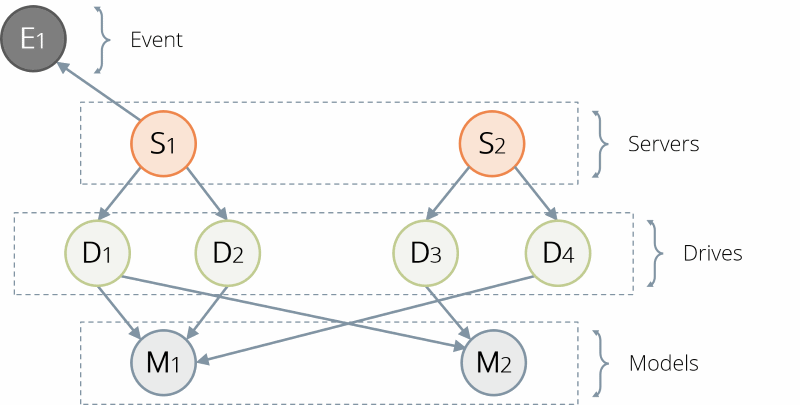

Suppose we are managing a small data center, and want to keep track of the components in servers, and how they change over time as we replace or upgrade systems. We might model this by creating vertices of different types to represent each server, each type of component (e.g. one vertex to represent each model of hard drive used), and each instance of a component (e.g. an individual physical hard drive). Furthermore, we may wish to log failure and maintenance events, and connect all of these vertices together by edges to connect an event to its server and to connect each instance of a component to both it’s model number and the server in which it is used. See below for a representation of this data model:

This would allow us to use a simple graph traversal to examine a particular failure event like a hard drive failure, determine the model number involved, and check which other machines use the same type of drive and thus are potentially at elevated risk for a failure.

Additionally, if we are storing all the temporal and revision data, like when an error occurs, when drives are added, removed, or replaced, when servers are brought online, etc., then we can look at our datacenter at any point in time. We can exclude past configurations from queries about the present time, or we can look back to see which configuration was live when a particular error occurred.

Basics for Time Travel

In this time traveling with graph databases tutorial, we will introduce the basic techniques for storing and querying the revision data performantly. These are covered in much greater detail in the original article. The three key notions are time-slicing, copy-on-write, and proxies.

Each vertex and edge will be augmented with two timestamps. The first will be called created and hold the timestamp denoting its creation time. The other will be called expired, denoting a time when it became invalid. We will use a placeholder value equal to the maximum representable integer to indicate it has not expired yet. The act of time-slicing is simply filtering the graph based on a given query time t, such that we return only data with values created <= t < expired.

When we wish to delete an edge or vertex, instead of actually deleting it, we simply expire it by setting the expired value to the current timestamp. When we wish to modify an edge or vertex, we expire the current version and create a new copy with the modified data. These two changes describe copy-on-write semantics.

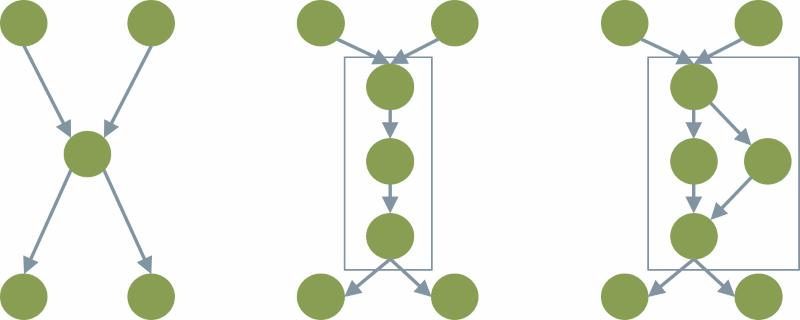

Copy-on-write introduces a possible performance pitfall. When creating a copy of a vertex, we would also need to copy all the incident edges. Since the number of edges could be very large, this could become expensive. Instead, we will use proxies to connect our vertices with their incident edges. In this transformation, each vertex in our application is joined by a single edge to an in-proxy vertex and to an out-proxy vertex. Then, each edge in our application connects the out-proxy of its source vertex to the in-proxy of its target vertex. In this way, when we modify a vertex, we need only copy it, and it’s proxy edges. The proxy vertices remain stable with respect to application edges.

Below is a demonstration of the proxy vertex transformation. The image on the left is our original graph. Let’s focus on the vertex in the center. If we add proxy vertices, we expand this into three vertices, with a single edge connecting the original vertex to each proxy, yielding the configuration in the center. If we wish to then make a change to the logical vertex, we need only create a new vertex and connect it to the proxy vertices, as demonstrated on the right.

Because of the copy-on-write semantics, we may have multiple physical copies of each logical vertex or edge. Each will receive an auto-generated _key value, but we may wish to use some persistent identifier across the copies. This also gives us an additional way to quickly lookup proxy vertices for a given logical vertex. We’ll see some examples of this later on.

Finally, with the proxies, we can move the timestamps of the vertices themselves, and onto the incident edges. Recall that each vertex will have exactly one incident edge in each direction connecting it to its proxies, which must be traversed to include the vertex itself in a traversal.

Data

Let’s go ahead and populate our database with a bit of data. First, let’s set up our collections.

db._create('proxy_in');

db._create('proxy_out');

db._create('servers');

db._create('drives');

db._create('models');

db._create('events');

db._createEdgeCollection('relations');These utility functions will make it a bit easier to populate our data. We can use them as simple pre-processors for our insertions.

const addTimestamps = (edge, created, expired) => {

return Object.assign(edge, {

created: created ? created : Date.now(),

expired: expired ? expired : Number.MAX_SAFE_INTEGER

});

};

const makeProxies = (docs) => {

return docs.map((doc) => {

return {id: doc.id};

});

};

const joinSets = (sources, targets, template) => {

let result = [];

const total = Math.min(sources.length, targets.length);

for (let i = 0; i < total; i++) {

result.push(Object.assign({}, template, {

_from: sources[i]._id,

_to: targets[i]._id

}));

}

return result;

};Now we will write several documents transactionally. We will choose to use the same created timestamp for all of them, so that the transactionality is preserved with respect to time-slicing.

{

const trx = db._createTransaction({ collections: { write: [

'proxy_in', 'proxy_out', 'servers', 'drives', 'models', 'relations'

]}});

const now = Date.now();

const pin = trx.collection('proxy_in');

const pout = trx.collection('proxy_out');

const cs = trx.collection('servers');

const cm = trx.collection('models');

const cd = trx.collection('drives');

const rel = trx.collection('relations');

const serversRaw = [

{id: 'f8a3075e-beea-467c-879f-01eed361fd5b'},

{id: '3035fab7-35f9-49d7-b56b-23d8712b5961'}

];

const servers = cs.save(serversRaw);

const serversIn = pin.save(makeProxies(serversRaw));

const serversOut = pout.save(makeProxies(serversRaw));

rel.save(joinSets(serversIn, servers, addTimestamps({type: 'proxy'}, now)));

rel.save(joinSets(servers, serversOut, addTimestamps({type: 'proxy'}, now)));

const modelsRaw = [

{id: 'f691d0d6-7c28-4aa3-951b-4479af745a17', model_no: 'WD60EFRX'},

{id: '24e9ba8c-6132-403f-9bfd-3c13d18eed7e', model_no: 'ST6000DX000'}

];

const models = cm.save(modelsRaw);

const modelsIn = pin.save(makeProxies(modelsRaw));

const modelsOut = pout.save(makeProxies(modelsRaw));

rel.save(joinSets(modelsIn, models, addTimestamps({type: 'proxy'}, now)));

rel.save(joinSets(models, modelsOut, addTimestamps({type: 'proxy'}, now)));

const drivesRaw = [

{id: '959c6126-4687-47e5-87cd-608c74b92168'},

{id: '42abcbfc-7f4e-4f4b-b7dc-c1fe792c2940'},

{id: '7154e8aa-19b8-4c8f-af2f-2181bd989b3b'},

{id: 'eb626e7d-23e2-4441-8fb1-4de283aad078'},

{id: '1c1740a8-7c57-47b9-970a-db8245762a54'},

{id: '9051f988-69f1-44d8-906c-56d38f3100f4'}

];

const drives = cd.save(drivesRaw);

const drivesIn = pin.save(makeProxies(drivesRaw));

const drivesOut = pout.save(makeProxies(drivesRaw));

rel.save(joinSets(drivesIn, drives, addTimestamps({type: 'proxy'}, now)));

rel.save(joinSets(drives, drivesOut, addTimestamps({type: 'proxy'}, now)));

const modelRelations = [

addTimestamps({type: 'instance_of', _from: drivesOut[0]._id, _to: modelsIn[0]._id}),

addTimestamps({type: 'instance_of', _from: drivesOut[1]._id, _to: modelsIn[0]._id}),

addTimestamps({type: 'instance_of', _from: drivesOut[2]._id, _to: modelsIn[0]._id}),

addTimestamps({type: 'instance_of', _from: drivesOut[3]._id, _to: modelsIn[1]._id}),

addTimestamps({type: 'instance_of', _from: drivesOut[4]._id, _to: modelsIn[1]._id}),

addTimestamps({type: 'instance_of', _from: drivesOut[5]._id, _to: modelsIn[1]._id})

];

rel.save(modelRelations);

const serverRelations = [

addTimestamps({type: 'contains', _from: serversOut[0]._id, _to: drivesIn[0]._id}),

addTimestamps({type: 'contains', _from: serversOut[0]._id, _to: drivesIn[1]._id}),

addTimestamps({type: 'contains', _from: serversOut[0]._id, _to: drivesIn[2]._id}),

addTimestamps({type: 'contains', _from: serversOut[1]._id, _to: drivesIn[3]._id}),

addTimestamps({type: 'contains', _from: serversOut[1]._id, _to: drivesIn[4]._id}),

addTimestamps({type: 'contains', _from: serversOut[1]._id, _to: drivesIn[5]._id}),

];

rel.save(serverRelations);

trx.commit();

}Time-slicing

We can now use time-slicing to query which drive models are used in a given server at the present moment.

{

const query = `

FOR s IN proxy_in

FILTER s.id == @serverId

FOR v, e, p IN 7..7 OUTBOUND s relations

OPTIONS {bfs: true, uniqueVertices: 'global'}

FILTER p.edges[*].created ALL <= @queryTime && p.edges[*].expired ALL > @queryTime

RETURN v

`;

const params = {

serverId: 'f8a3075e-beea-467c-879f-01eed361fd5b',

queryTime: Date.now()

};

db._query(query, params).toArray();

}This should return something like the following.

[

{

"_key" : "136",

"_id" : "models/136",

"_rev" : "_Z-TXTDy--C",

"id" : "f691d0d6-7c28-4aa3-951b-4479af745a17",

"model_no" : "WD60EFRX"

}

]

Copy-on-write

Let’s suppose we want to add additional information to one of our server records, such as a server IP. To do this, we need to first expire the document, then write the new copy. We will use a function to help us.

const expire = (trx, ids, expired) => {

if (expired === undefined || expired === null) {

expired = Date.now();

}

const query = `

FOR proxy IN proxy_in

FILTER proxy.id IN @ids

FOR v, e, p IN 2..2 OUTBOUND proxy relations

OPTIONS {bfs: true, uniqueVertices: 'global'}

FILTER p.edges[*].expired ALL == @maxTimestamp

FOR edge IN p.edges

UPDATE edge WITH {expired: @expired} IN relations

`;

const params = {

expired,

ids,

maxTimestamp: Number.MAX_SAFE_INTEGER

};

trx.query(query, params);

};

const strip = (doc) => {

const stripped = Object.assign({}, doc);

if (stripped._key !== undefined) {

delete stripped._key;

}

if (stripped._id !== undefined) {

delete stripped._id;

}

if (stripped._rev !== undefined) {

delete stripped._rev;

}

return stripped;

};

{

const trx = db._createTransaction({collections: {

read: ['proxy_in', 'proxy_out'],

write: ['servers', 'relations']

}});

const now = Date.now();

const cs = trx.collection('servers');

const rel = trx.collection('relations');

const query = `

FOR doc IN proxy_in

FILTER doc.id == @serverId

FOR v, e, p IN 2..2 OUTBOUND doc relations

FILTER p.edges[*].expired ALL == @maxTimestamp

RETURN p.vertices

`;

const params = {

serverId: 'f8a3075e-beea-467c-879f-01eed361fd5b',

maxTimestamp: Number.MAX_SAFE_INTEGER

};

const docs = trx.query(query, params).toArray()[0];

print(docs);

// mark the old version expired

expire(trx, [docs[1].id], now);

// generate and save the new version

const revised = cs.save(Object.assign(strip(docs[1]), {ip: '10.10.10.42'}));

const edges = [

addTimestamps({type: 'proxy', _from: docs[0]._id, _to: revised._id}, now),

addTimestamps({type: 'proxy', _from: revised._id, _to: docs[2]._id}, now)

];

print(rel.save(edges));

trx.commit();

}We can then check the current version of the server document by time-slicing.

{

const query = `

FOR s IN proxy_in

FILTER s.id == @serverId

FOR v, e, p IN 1..1 OUTBOUND s relations

OPTIONS {bfs: true, uniqueVertices: 'global'}

FILTER p.edges[*].created ALL <= @queryTime && p.edges[*].expired ALL > @queryTime

RETURN v

`;

const params = {

serverId: 'f8a3075e-beea-467c-879f-01eed361fd5b',

queryTime: Date.now()

};

db._query(query, params).toArray();

}This should return something like the following.

[

{

"_key" : "189",

"_id" : "servers/189",

"_rev" : "_Z-TXTFu---",

"id" : "f8a3075e-beea-467c-879f-01eed361fd5b",

"ip" : "10.10.10.42"

}

]However, we can check for all revisions by removing the time-slicing.

{

const query = `

FOR s IN proxy_in

FILTER s.id == @serverId

FOR v, e, p IN 1..1 OUTBOUND s relations

OPTIONS {bfs: true, uniqueVertices: 'global'}

RETURN v

`;

const params = {

serverId: 'f8a3075e-beea-467c-879f-01eed361fd5b'

};

db._query(query, params).toArray();

}This should return something like the following.

[

{

"_key" : "126",

"_id" : "servers/126",

"_rev" : "_Z-TXTDq---",

"id" : "f8a3075e-beea-467c-879f-01eed361fd5b"

},

{

"_key" : "189",

"_id" : "servers/189",

"_rev" : "_Z-TXTFu---",

"id" : "f8a3075e-beea-467c-879f-01eed361fd5b",

"ip" : "10.10.10.42"

}

]

Putting It All Together

Now let’s add another server and some drives to go with it.

{

const trx = db._createTransaction({ collections: {

write: ['proxy_in', 'proxy_out', 'servers', 'drives', 'relations'],

read: ['models']

}});

const now = Date.now();

const pin = trx.collection('proxy_in');

const pout = trx.collection('proxy_out');

const cs = trx.collection('servers');

const cd = trx.collection('drives');

const rel = trx.collection('relations');

const serversRaw = [

{id: 'a9014a33-6876-467e-9f19-3e803def7b93'}

];

const servers = cs.save(serversRaw);

const serversIn = pin.save(makeProxies(serversRaw));

const serversOut = pout.save(makeProxies(serversRaw));

rel.save(joinSets(serversIn, servers, addTimestamps({type: 'proxy'}, now)));

rel.save(joinSets(servers, serversOut, addTimestamps({type: 'proxy'}, now)));

const modelQuery = `

FOR m IN models

FOR v, e IN 1..1 INBOUND m relations

OPTIONS {bfs: true, uniqueVertices: 'global'}

FILTER e.created <= @queryTime && e.expired > @queryTime

return v

`;

const modelParams = {queryTime: now};

const modelsIn = trx.query(modelQuery, modelParams).toArray();

const drivesRaw = [

{id: '45a5d918-b074-4dc7-ad7d-94ab7dbb0ee3'},

{id: 'bc21fe6f-68e1-423f-a073-c64a1a83ddb9'},

];

const drives = cd.save(drivesRaw);

const drivesIn = pin.save(makeProxies(drivesRaw));

const drivesOut = pout.save(makeProxies(drivesRaw));

rel.save(joinSets(drivesIn, drives, addTimestamps({type: 'proxy'}, now)));

rel.save(joinSets(drives, drivesOut, addTimestamps({type: 'proxy'}, now)));

// one drive of each model

const modelRelations = [

addTimestamps({type: 'instance_of', _from: drivesOut[0]._id, _to: modelsIn[0]._id}),

addTimestamps({type: 'instance_of', _from: drivesOut[1]._id, _to: modelsIn[1]._id}),

];

rel.save(modelRelations);

const serverRelations = [

addTimestamps({type: 'contains', _from: serversOut[0]._id, _to: drivesIn[0]._id}),

addTimestamps({type: 'contains', _from: serversOut[0]._id, _to: drivesIn[1]._id}),

];

rel.save(serverRelations);

trx.commit();

}Now suppose we register a failure in one of these drives. We want to log the event and remove the failed drive from the datacenter.

const expireEdge = (trx, sourceId, targetId, expired) => {

if (expired === undefined || expired === null) {

expired = Date.now();

}

const query = `

FOR pin IN proxy_in

FILTER pin.id == @sourceId

FOR v, e IN 1..1 OUTBOUND pin relations

OPTIONS {bfs: true, uniqueVertices: 'global'}

FILTER v.id == @targetId

FILTER e.expired ALL == @maxTimestamp

UPDATE e WITH {expired: @expired} IN relations

`;

const params = {

expired,

maxTimestamp: Number.MAX_SAFE_INTEGER,

sourceId,

targetId

};

trx.query(query, params);

};

{

const trx = db._createTransaction({ collections: {

write: ['proxy_in', 'proxy_out', 'events', 'relations'],

read: ['servers', 'drives']

}});

const now = Date.now();

const pin = trx.collection('proxy_in');

const pout = trx.collection('proxy_out');

const ce = trx.collection('events');

const rel = trx.collection('relations');

const eventsRaw = [

{

type: 'drive_failure',

id: 'bfa5a652-4b53-4865-bdb4-5b33e1aa72e5',

server_id: 'a9014a33-6876-467e-9f19-3e803def7b93',

drive_id: '45a5d918-b074-4dc7-ad7d-94ab7dbb0ee3'

}

];

const events = ce.save(eventsRaw);

const eventsIn = pin.save(makeProxies(eventsRaw));

const eventsOut = pout.save(makeProxies(eventsRaw));

rel.save(joinSets(eventsIn, events, addTimestamps({type: 'proxy'}, now)));

rel.save(joinSets(events, eventsOut, addTimestamps({type: 'proxy'}, now)));

const serverQuery = `

FOR s IN servers

FILTER s.id == @serverId

FOR v, e IN 1..1 OUTBOUND s relations

FILTER e.created <= @queryTime && e.expired > @queryTime

return v

`;

const serverParams = {

queryTime: now,

serverId: 'a9014a33-6876-467e-9f19-3e803def7b93'

};

const serversOut = trx.query(serverQuery, serverParams).toArray();

// link the event to the server

const serverRelations = [

addTimestamps({type: 'contains', _from: serversOut[0]._id, _to: eventsIn[0]._id}),

];

rel.save(serverRelations);

// expire the drive

expire(trx, ['45a5d918-b074-4dc7-ad7d-94ab7dbb0ee3'], now);

// expire the edge linking the drive to its server

expireEdge(trx, 'a9014a33-6876-467e-9f19-3e803def7b93', '45a5d918-b074-4dc7-ad7d-94ab7dbb0ee3', now)

trx.commit();

}Suppose we want to follow up and check which servers still have instances of this same drive model which failed.

{

const query = `

FOR event IN events

FILTER event.id == @eventId

FOR server, e1, p1 IN 3..3 INBOUND event relations

FILTER p1.edges[*].created ALL <= @queryTime && p1.edges[*].expired ALL > @queryTime

FOR model, e2, p2 IN 6..6 OUTBOUND server relations

FILTER p2.vertices[3].id == event.drive_id

FOR vulnerable, e3, p3 IN 6..6 INBOUND model relations

OPTIONS {bfs: true, uniqueVertices: 'global'}

FILTER p3.edges[*].created ALL <= @queryTime && p3.edges[*].expired ALL > @queryTime

RETURN vulnerable

`;

const params = {

eventId: 'bfa5a652-4b53-4865-bdb4-5b33e1aa72e5',

queryTime: Date.now()

};

db._query(query, params).toArray();

}This should return our (previously-modified) server.

[

{

"_key" : "189",

"_id" : "servers/189",

"_rev" : "_Z-TXTFu---",

"id" : "f8a3075e-beea-467c-879f-01eed361fd5b",

"ip" : "10.10.10.42"

}

]Wrapping Up

As you can see by going through this time traveling with graph databases tutorial, maintaining revision history can be accomplished with ArangoDB in a relatively straightforward fashion. With appropriately defined indices, it should be quite performant as well.

Unfortunately, it does add some overhead to application development. Luckily, much of this can be abstracted away into some helper methods. I have chosen to show some of this abstraction above to help but did not develop a full library-like solution. The examples above help to demonstrate well the graph structures that result from copy-on-write semantics and should hopefully help you understand well enough to start working on your own solutions.

If you wish to explore the topic in further detail, please check out our earlier article on the subject. We love to hear about what others are doing with ArangoDB, so feel free to drop by our community Slack and share your story or get some help!

Skip to content

Skip to content